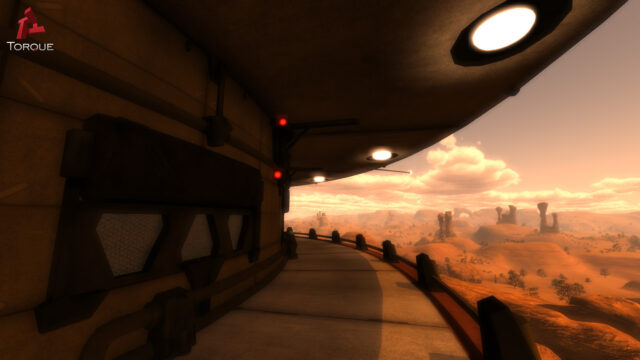

Image rendered in real-time

The new generation of game engines is out there! They are full of new rendering techniques. Some cover classic topics such as shadows, water rendering and post-processing. Others are more recent, such as deferred shading and post-processing anti-aliasing. But there is one rendering topic that has lain dormant for a few years and is, at last, being awakened:

Subsurface Scattering

Unreal Engine 3 features Subsurface Scattering (SSS), and so do CryEngine 3 and Confetti RawK. Specifically, these last two engines use what is called Screen-Space Subsurface Scattering (SSSSS) (update: it seems Unreal Engine 3 also uses SSSSS), an idea we devised two years ago for the first book in the GPU Pro series. Given the importance of human beings in storytelling, I believe that some form of subsurface scattering simulation will be the next revolution in games. Bear with me and discover why.

Let’s start the story from the beginning. What is SSS? Simply put, SSS is a light transport mechanism in which light travels inside objects. On most surfaces, light is reflected at the same point where it hits the surface. But in translucent objects, which are strongly affected by SSS, light enters the surface, scatters inside the object, and finally exits all around the point of incidence. To make a long story short, light gets blurred, and this gives translucent objects their soft look. Wrinkles and pores fill with light, which makes the surface look less harsh. Reddish gradients appear on the boundaries between light and shadow. And last, but not least, light travels through thin slabs such as ears or nostrils, coloring them with the bright, warm tones that we immediately associate with translucency.

Now you may be thinking: why should I care about this SSS thing? Every year, advances in computer graphics bring offline rendering closer to photorealism. In a similar fashion, real-time rendering is evolving, squeezing every available resource to catch up with offline rendering. We have all seen how highly detailed normal maps have improved the realism of character faces. Unfortunately, using such detailed maps without paying further attention to how light interacts with skin inevitably pushes characters into the much-dreaded uncanny valley. The next time you see a detailed face in a game, take your time to observe the pores, the wrinkles, the scars, the transition from light to shadow, the overall lighting… Then, ask yourself the following question: is what you see skin… or… maybe a piece of stone? If you manage to abstract away the colors and shapes you observe, you will probably agree that it resembles a cold statue rather than a soft, warm and translucent face. So it’s time to take offline SSS techniques and bring them to the real-time realm.

Some years ago, Henrik Wann Jensen and colleagues came up with a solution to the challenging equations of diffusion theory, and this solution made subsurface scattering practical in offline rendering. His technology was used to render Gollum’s skin in The Lord of the Rings, and Henrik received a technical Oscar for it (awarded for the first time in history). Craig Donner and Henrik Wann Jensen later extended this model to accurately render complex multilayered materials. Eugene d’Eon and David Luebke even ported it to the GPU and obtained photorealistic skin images in real time for the first time. Unfortunately, it took all the processing power of a GeForce 8800 GTX to render a single head.

It was then that Screen-Space Subsurface Scattering was born, aiming to make skin rendering practical in game environments. Of its many advantages, I would like to highlight two. First, the shader runs only on the visible parts of the skin. Second, the calculations are performed at screen resolution (instead of texture resolution). Subsurface scattering is therefore computed at exactly the resolution required, which acts as a natural level of detail for SSS. The cost depends on screen-space coverage: if the head is small, the cost is small; if the head is big, the cost grows accordingly.
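A quick back-of-the-envelope count shows how the cost tracks screen coverage. The sketch below counts texture fetches for the blur shader shown later in this post. Each pass reads one color and one depth value for the center, plus a color/depth pair for each of six neighbors, and there are six passes in total. The head sizes, the 1024x1024 texture, and the idea of running the same kernel in texture space are assumptions made only for comparison. The rectangle also overestimates the work, because the stencil test skips non-skin pixels inside it.

```python
# Fetches per pass of the blur shader: center color + center depth,
# plus one color and one depth fetch for each of the 6 neighbors.
FETCHES_PER_PASS = 2 + 6 * 2  # = 14
PASSES = 6  # three horizontal + three vertical


def screen_space_cost(width_px, height_px):
    """Fetches for a skin region covering width_px x height_px pixels on screen."""
    return width_px * height_px * PASSES * FETCHES_PER_PASS


def texture_space_cost(texture_size):
    """Hypothetical texture-space baseline: the same kernel run on every texel."""
    return texture_size * texture_size * PASSES * FETCHES_PER_PASS


for label, (w, h) in {"distant head": (60, 80), "close-up": (600, 800)}.items():
    print(f"{label}: {screen_space_cost(w, h):,} fetches")
print(f"1024x1024 texture space: {texture_space_cost(1024):,} fetches")
```

A head that covers ten times as many pixels in each direction costs a hundred times more. A texture-space approach pays for every texel, no matter how small the head appears on screen.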

The idea is similar to deferred shading. The scene is rendered as usual, and skin pixels are marked in the stencil buffer. After that, skin calculations are executed in screen space for each marked skin pixel. These calculations try to follow the shape of the surface, which is inferred from the depth buffer. This makes it possible to render photorealistic crowds of people at minimal cost. One thing I love about our approach is that the math behind skin rendering theory is amazingly complex, and yet in the end it reduces to executing this simple post-processing shader only six times (three horizontally and three vertically):

```hlsl
// Resources and parameters used by the shader. They live elsewhere in the
// original effect; the declarations below are assumed for completeness.
Texture2D colorTex;          // lit scene color; alpha scales the blur width per pixel
Texture2D<float> depthTex;   // linear depth
SamplerState PointSampler;
SamplerState LinearSampler;
float correction;            // how strongly depth differences suppress the blur

struct PassV2P {
    float4 position : SV_POSITION;
    float2 texcoord : TEXCOORD0;
};

float4 BlurPS(PassV2P input, uniform float2 step) : SV_TARGET {
    // Gaussian weights for the six samples around the current pixel:
    //   -3 -2 -1 +1 +2 +3
    float w[6] = { 0.006, 0.061, 0.242, 0.242, 0.061, 0.006 };
    float o[6] = { -1.0, -0.6667, -0.3333, 0.3333, 0.6667, 1.0 };

    // Fetch color and linear depth for current pixel:
    float4 colorM = colorTex.Sample(PointSampler, input.texcoord);
    float depthM = depthTex.Sample(PointSampler, input.texcoord);

    // Accumulate center sample, multiplying it with its Gaussian weight:
    float4 colorBlurred = colorM;
    colorBlurred.rgb *= 0.382;

    // Calculate the step that we will use to fetch the surrounding pixels,
    // where "step" is:
    //   step = sssStrength * gaussianWidth * pixelSize * dir
    // The closer the pixel, the stronger the effect needs to be, hence
    // the factor 1.0 / depthM.
    float2 finalStep = colorM.a * step / depthM;

    // Accumulate the other samples:
    [unroll]
    for (int i = 0; i < 6; i++) {
        // Fetch color and depth for current sample:
        float2 offset = input.texcoord + o[i] * finalStep;
        float3 color = colorTex.SampleLevel(LinearSampler, offset, 0).rgb;
        float depth = depthTex.SampleLevel(PointSampler, offset, 0);

        // If the difference in depth is huge, we lerp color back to "colorM":
        float s = min(0.0125 * correction * abs(depthM - depth), 1.0);
        color = lerp(color, colorM.rgb, s);

        // Accumulate:
        colorBlurred.rgb += w[i] * color;
    }

    // The result will be alpha blended with the current buffer using specific
    // RGB weights. For more details, I refer you to the GPU Pro chapter :)
    return colorBlurred;
}
```
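For readers who want to experiment without a GPU, here is a CPU reference of the shader above in plain Python. It operates on a single channel and relies on several assumptions:

- Coordinates are in pixels rather than texture coordinates.
- The stencil test is emulated with a boolean `mask`.
- Horizontal and vertical passes simply alternate with the same width.
- The final alpha blend with per-pass RGB weights from the GPU Pro chapter is not reproduced.

`blur_row`, `sss_blur` and the sampler helpers are hypothetical names.

```python
import math

# Weights and normalized offsets copied from the BlurPS shader above.
W = [0.006, 0.061, 0.242, 0.242, 0.061, 0.006]
O = [-1.0, -2.0 / 3.0, -1.0 / 3.0, 1.0 / 3.0, 2.0 / 3.0, 1.0]
CENTER_WEIGHT = 0.382  # 1 - sum(W)


def sample_linear(row, x):
    """Linearly filtered fetch with clamp-to-edge addressing (like LinearSampler)."""
    x = min(max(x, 0.0), len(row) - 1.0)
    x0 = int(math.floor(x))
    x1 = min(x0 + 1, len(row) - 1)
    t = x - x0
    return row[x0] * (1.0 - t) + row[x1] * t


def sample_point(row, x):
    """Nearest-texel fetch with clamp-to-edge addressing (like PointSampler)."""
    i = int(math.floor(x + 0.5))
    return row[min(max(i, 0), len(row) - 1)]


def blur_row(color, depth, strength, mask, step_px, correction):
    """One 1D pass of the shader over a row; pixels outside the mask are copied."""
    out = list(color)
    for x in range(len(color)):
        if not mask[x]:  # emulates the stencil test: only skin pixels are shaded
            continue
        color_m, depth_m = color[x], depth[x]
        # Closer pixels (smaller linear depth) get a wider kernel.
        final_step = strength[x] * step_px / depth_m
        acc = CENTER_WEIGHT * color_m
        for w, o in zip(W, O):
            pos = x + o * final_step
            c = sample_linear(color, pos)
            d = sample_point(depth, pos)
            # Large depth differences pull the sample back toward the center color.
            s = min(0.0125 * correction * abs(depth_m - d), 1.0)
            c = c + (color_m - c) * s  # lerp(c, color_m, s)
            acc += w * c
        out[x] = acc
    return out


def transpose(grid):
    return [list(col) for col in zip(*grid)]


def sss_blur(color, depth, strength, mask, step_px, correction, iterations=3):
    """Alternate horizontal and vertical passes: 3 + 3 = 6 passes by default."""
    depth_t, strength_t, mask_t = transpose(depth), transpose(strength), transpose(mask)
    for _ in range(iterations):
        color = [blur_row(c, d, s, m, step_px, correction)
                 for c, d, s, m in zip(color, depth, strength, mask)]
        cols = [blur_row(c, d, s, m, step_px, correction)
                for c, d, s, m in zip(transpose(color), depth_t, strength_t, mask_t)]
        color = transpose(cols)
    return color


# Usage: a lit skin patch (color 1, depth 1) next to a background (color 0, depth 100).
row_color = [1.0] * 5 + [0.0] * 5
row_depth = [1.0] * 5 + [100.0] * 5
ones, skin = [1.0] * 10, [True] * 10
print(round(blur_row(row_color, row_depth, ones, skin, 3.0, 1.0)[4], 3))  # → 1.0
print(round(blur_row(row_color, row_depth, ones, skin, 3.0, 0.0)[4], 3))  # → 0.691
```

The usage lines show the role of the depth test. With `correction = 1.0`, samples that land on the distant background are pulled back to the skin color, so the edge pixel keeps its value. With `correction = 0.0`, the background bleeds into the skin.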

My crusade to render photorealistic skin does not end here. We have been researching how to render very fine skin properties, including translucency, wrinkles, and even color changes due to emotions and other conditions. The last of these was presented at ACM SIGGRAPH Asia 2010. I will be writing about each of these projects, so stay tuned!

I truly believe we are getting closer and closer to overcoming the uncanny valley. In the near future, I think we will see astonishing skin renderings in games that make characters more human. This will create stronger connections between the characters’ emotions and those of the players. Storytellers will then be able to reach gamers’ feelings and, ultimately, their hearts.